Frank Schneider

Postdoc | University of Tübingen | Methods of Machine Learning (MoML)

Hi, I’m Frank! I’m a postdoctoral researcher at the University of Tübingen in the Methods of Machine Learning group, led by Philipp Hennig. I’m also a chair of the Algorithms working group at MLCommons.

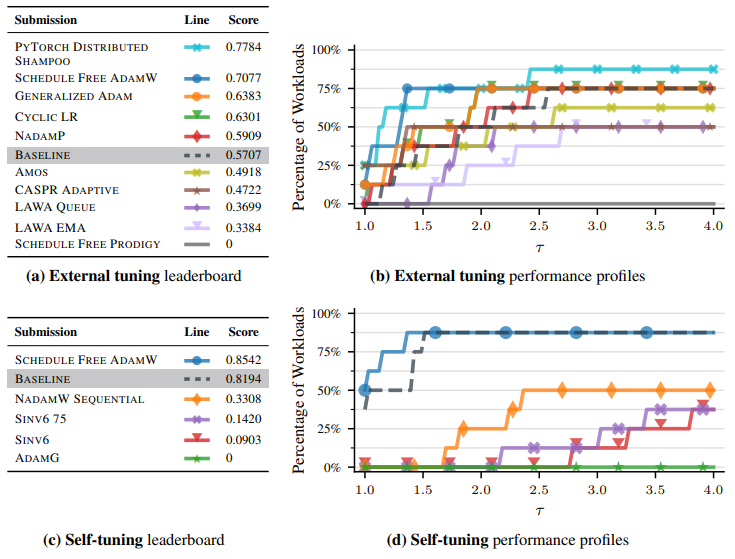

My research focuses on efficient and user-friendly training methods for machine learning. I’m particularly interested in eliminating tedious hyperparameters (e.g., learning rates, schedules) to automate neural network training and make deep learning more accessible. A key aspect of my work is designing rigorous and meaningful benchmarks for training methods, such as AlgoPerf.

Previously, I earned my PhD in Computer Science from the University of Tübingen, supervised by Philipp Hennig, as part of the IMPRS-IS (International Max Planck Research School for Intelligent Systems). Before that, I studied Simulation Technology (B.Sc., M.Sc.) at the University of Stuttgart and Industrial and Applied Mathematics (M.Sc.) at TU/e Eindhoven. My master’s thesis, supervised by Maxim Pisarenco and Michiel Hochstenbach, explored novel preconditioners for structured Toeplitz matrices. This thesis was conducted at ASML (Eindhoven), a company specializing in lithography systems for the semiconductor industry.

News

| Date | News |
|---|---|
| Nov 2025 | 🗣️ I will be giving an invited talk at the Workshop on Distributed Training in the Era of Large Models at KAUST, in Thuwal, Saudi Arabia. |
| Jul 2025 | 🗣️ I am giving an invited talk at ICCOPT in Los Angeles about my work on AlgoPerf. |
| Mar 2025 | 🤝 We are organizing the CodeML Workshop at ICML 2025 in Vancouver focusing on open-source software in machine learning! |
| Mar 2025 | 📝 Our paper “Accelerating Neural Network Training: An Analysis of the AlgoPerf Competition” has been accepted at ICLR 2025! See you in Singapore! |
| Feb 2025 | 🤝 We are organizing the first AlgoPerf Workshop at Meta HQ in Menlo Park on February 11th & 12th. |

Selected Publications

2025

2023

2021

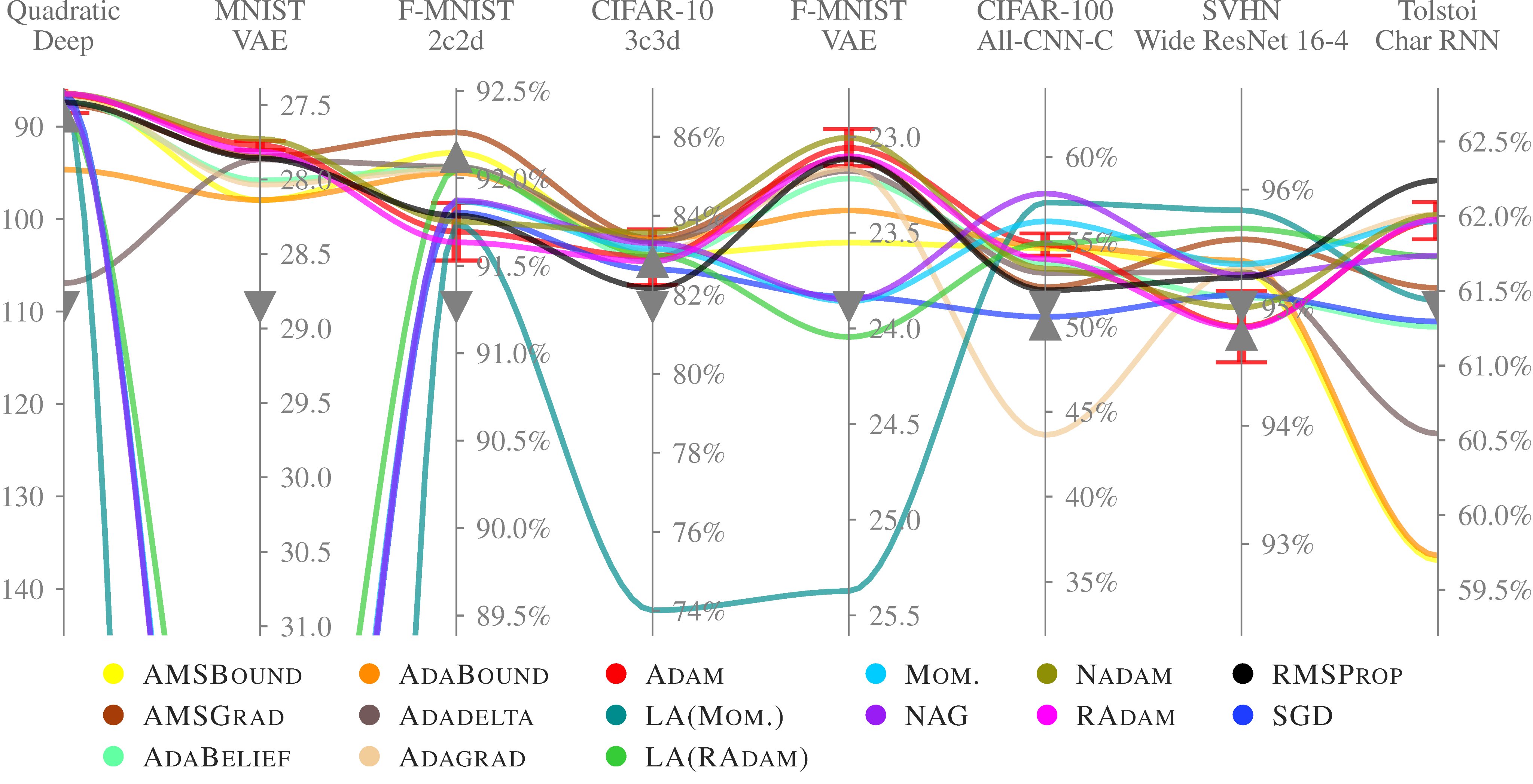

- Cockpit: A Practical Debugging Tool for the Training of Deep Neural Networks. In Neural Information Processing Systems (NeurIPS), 2021. We introduce a visual and statistical debugger specifically designed for deep learning helping to understand the dynamics of neural network training.